Systems Engineering: the Missing Glue for AI Delivery

Old Unified Process lessons enable breakthrough AI delivery

This post starts a series on how systems engineering approaches, married with agile and lean techniques, enable AI delivery that meets or exceeds strategic organizational needs.

Does Low-Risk Delivery Extend to AI Solutions?

Over the last 20 years, solution delivery has followed well-worn architectural approaches with mature, proven tooling. Simultaneously, agile delivery has become the industry best practice for software delivery lifecycles (SDLCs). Agile is easier when working on a known problem set with established architectural patterns and tooling. As a result, typical systems leverage a minimalistic approach to architectural diagrams and documentation. These systems simply follow industry norms, leveraging their team’s prior experience and opinionated tooling approaches to serve as their de facto architecture and systems engineering process. However, as Artificial Intelligence (AI)-enabled solutions become standard, they bring with them a new set of challenges that do not follow the same norms as these traditional systems.

AI Brings New Delivery Challenges

Challenge 1: Prototypes are not Minimum Viable Products (MVPs)

Many modern projects want to leverage lean principles to produce and release MVPs. MVPs, like prototypes, are lightweight solutions with just enough done to test product/feature assumptions. However, MVPs and prototypes differ in their typical quality levels. Prototypes tend to have minimal quality characteristics, while MVPs follow quality best practices that enable them to serve as foundational, albeit minimally viable, software. To put it another way, since MVPs are built to serve as the reusable foundation of the final solution, a much greater emphasis is put on establishing high quality standards and minimizing defects. In contrast, prototypes typically embrace more of a “just get it to work” mentality. As noted by Eric Ries, a central figure in popularizing MVPs, in his book The Lean Startup, “Defects make it more difficult to evolve the product. They actually interfere with our ability to learn and so are dangerous to tolerate in any production process”. Care must therefore be taken to evolve our AI prototypes into MVPs that can serve as a solid foundation for subsequent delivery.

The Data Science approaches that underpin AI systems are inherently experimentation driven. These efforts typically leverage Data Scientists to explore available data and determine what machine learning (ML) techniques might be applicable. They then validate these approaches using data science “notebooks” - effectively scripts with embedded documentation and charting. While extremely effective at capturing the thought process of the experiment and the evidence that led to a desired ML approach, notebooks are not as well suited to applying the industry best practices that software engineering treats as foundational (e.g., object orientation, testing, modular design). The result is akin to a true prototype: it demonstrates the right concept but is too fragile to build upon.
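As a rough illustration of the gap, here is a minimal sketch of one piece of notebook logic pulled out into a small, testable component. The `Standardizer` class and its feature-scaling step are hypothetical examples chosen for this post, not taken from any particular project; the point is only that an explicit component gives you somewhere to handle and test edge cases that a notebook cell tends to skip.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Standardizer:
    """Feature scaling step, extracted from a hypothetical notebook cell."""

    center: float
    scale: float

    @classmethod
    def fit(cls, values):
        """Learn the centering and scaling parameters from training values."""
        if not values:
            raise ValueError("cannot fit on empty data")
        spread = pstdev(values)
        # Guard against constant columns, a case notebooks rarely exercise.
        return cls(center=mean(values), scale=spread if spread > 0 else 1.0)

    def transform(self, values):
        """Apply the learned parameters to new values."""
        return [(v - self.center) / self.scale for v in values]
```

Because fitting and transforming are now separate, named operations, a unit test can check the empty-input and constant-column cases directly, and the same fitted object can be reused at training and prediction time.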

SDLCs are in part intended to help ensure projects are making efficient delivery decisions. As such, it is reasonable to think that a project’s SDLC would help prevent prototype-level code from being deployed in a production capacity. As noted earlier, however, modern agile systems have been honed on boilerplate tools and approaches, leaving them ill prepared to provide the checks and balances needed to prevent the promotion of prototypes into production. As a result, issues around concurrency, regression, and scale often creep into and undermine these prototype AI systems – both in their current and future releases.

Challenge 2: Machine Learning is a Specialized Skill

On AI projects, Data Scientists and Machine Learning Engineers (MLEs) are often asked to be specializing generalists: specialists in ML who are also generalists in software delivery. It’s common to see notebook solutions put into production with minimal testing, or ML solutions developed on a shared cloud server that team members log into and change without version control. Data Scientists and MLEs are extremely bright individuals with excellent expertise in their field. However, their field intersects with software delivery, and they are often asked to play their core role as well as that of Data Engineer, Software Engineer, and/or DevOps Engineer with little to no training in these fields. If we flipped the script and asked Data Engineers to perform Data Science, similar issues would arise in the opposite direction.

The combination of these challenges leads many projects to take on a blind risk: treating AI-enabled applications as though they carry the same low risk as more traditional, tried-and-true applications.

Lowering AI Delivery Risk with Systems Engineering

Before open source frameworks brought commonality across solutions, there was substantial variation in the industry. These differences were managed with robust systems engineering approaches.

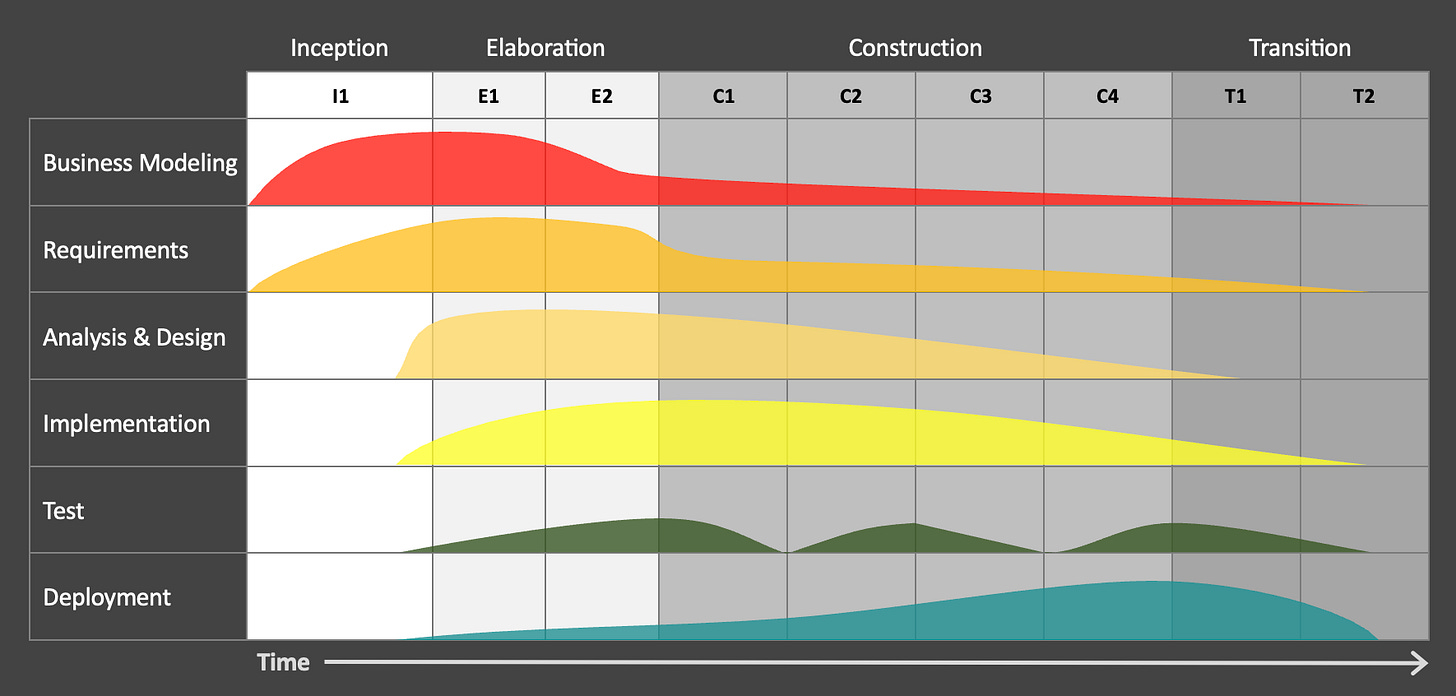

Arguably, the most well-known systems engineering approach was the Rational Unified Process (RUP). RUP might be most famous as the SDLC that moved projects away from waterfall or spiral (e.g., mini-waterfall) approaches. RUP split iterations (effectively sprints, in today’s parlance) across four phases: Inception, Elaboration, Construction, and Transition. At a very high level, each phase would contain one or more iterations with the following focus:

Inception - define scope and proposed architecture

Elaboration - implement proposed architecture to validate it is executable; generally lower high-risk items to an acceptable state

Construction - build the system

Transition - deploy into production

These phases, along with RUP’s six engineering disciplines (and its supporting disciplines), are famously depicted in the RUP Hump Chart, which visualizes a common example of how a team’s focus shifts across disciplines during a specific release.

Experiment Before Committing to Architectural Approach

RUP reduces technical risk by first defining the intended architecture, based on high-level requirements and core use cases, by the end of the Inception phase. This architectural plan is known as a candidate architecture: how you believe the system will be architected, but without proof that it will work effectively. The Elaboration phase is laser focused on implementing the candidate architecture against real use cases to validate that it can serve as the basis for the release’s remaining technical work.

Candidate architectures are a natural fit for hardening Data Science’s exploratory nature because they take a high-level plan crafted by experts and prove that it is fit for purpose. At a high level, this is exactly the approach needed to field a production AI solution:

Inception: explore data, determine most likely AI approach

Elaboration: validate AI approach in notebook

Construction: harden notebooks into traditional software components

Transition: deploy into production with drift detection
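To make the Transition step a little more concrete, here is one possible sketch of a drift check. It uses the Population Stability Index (PSI), which is one common way to compare a feature's training-time distribution with what the deployed model is seeing. The choice of PSI, the function name, the bin count, and the `eps` floor are all assumptions made for illustration; the approach described in this post does not prescribe a specific drift metric.

```python
import math


def population_stability_index(baseline, live, bins=10, eps=1e-6):
    """Compare two samples of one numeric feature; larger means more drift."""
    if not baseline or not live:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero-width bins for constant data

    def bin_fractions(sample):
        counts = [0] * bins
        for value in sample:
            # Clamp values outside the baseline range into the edge bins.
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(count / len(sample), eps) for count in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A deployed system might compute this on a schedule for each model input and raise an alert when the value crosses a threshold the team has agreed on. Rules of thumb such as 0.1 and 0.25 are often quoted for PSI, but the right threshold depends on the feature and the use case.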

A crucial feature in this approach is that sometimes candidate architectures fail. RUP handles this by allowing multiple elaboration-focused iterations, if needed, to reach a validated candidate architecture. This situation is also common in Data Science, where chosen models sometimes don’t fit available data as well as expected.

Systems Engineering Melds Data Science and Software Approaches to Set Common Team Expectations

A large portion of what is delivered in AI-enabled efforts today uses prototyping techniques that are only appropriate for risk reduction purposes. By blending in a common systems engineering approach, we can help align project expectations across all project roles to deliver high quality solutions at a more rapid pace, realizing impactful and iterative MVP releases in the process.

This approach is critical to scaling AI in a manner that can live up to its ever-increasing hype. We can and should use the engineering best practices and approaches that helped shepherd prior technologies to more mature and stable states to achieve the same positive outcomes for AI. After all, AI solutions are still software at their core and must be treated that way for successful delivery.

As we will discuss more in subsequent posts, using RUP-inspired systems engineering practices does not mean reverting away from agile processes. In fact, we’ll be highlighting Kanban-inspired sprintless agile approaches as a foundational SDLC concept. Many concepts from RUP, especially Elaboration phase activities, fit nicely and can even gain more lift from modern agile concepts.

What’s Next

Using a RUP Hump Chart-inspired concept as a roadmap, our next posts will highlight how AI engineering generally maps tightly to existing industry terms and approaches, demystifying the array of supposedly new AI-specific concepts by tying them back to their proven software delivery equivalents.

Next post: Who’s Who in the AI Zoo? (Data Scientist, Machine Learning Engineer, and MLOps Engineer and how they map to traditional software delivery roles)

Blog Series Links:

Systems Engineering: the Missing Glue for AI Delivery (this post)

Incepting Artificial Intelligence (AI) Efforts with an AI Systems Engineering Mindset